In case you're new to AWS Lambda, here's a little primer. Lambda is the name of AWS's service for cloud functions, the basic building block of serverless applications. A Lambda deployment package is a ZIP file that contains the source code for the Lambda function. At its most basic, a deployment package has one file in it.

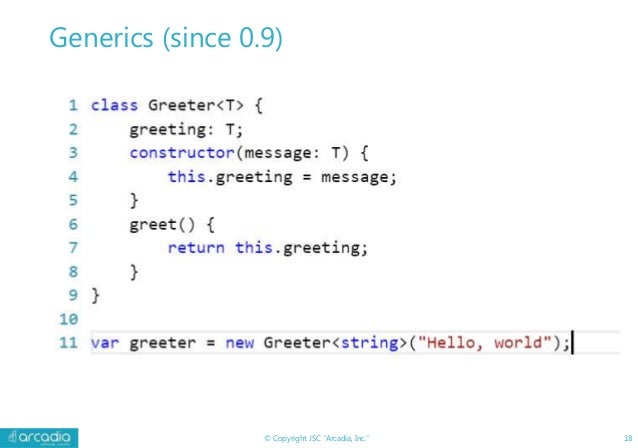

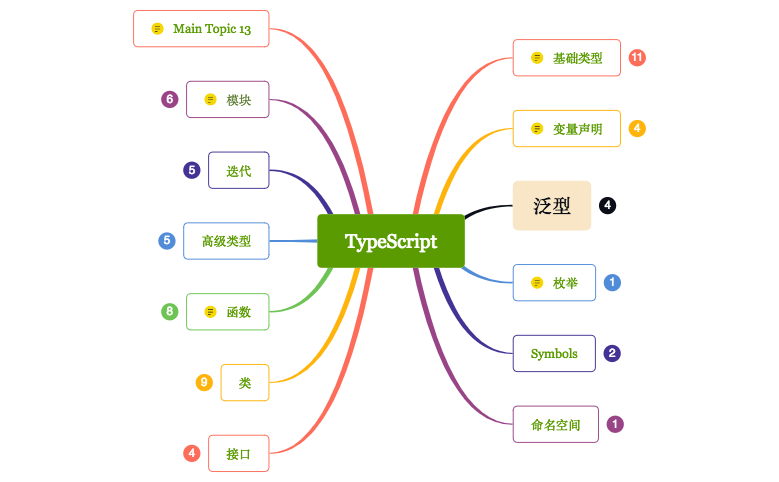

Regardless of which IaC tool you use, you will need to define the name of the handler, which consists of the filename and the name of the handler function inside that file.

To summarize: when you are writing AWS Lambda functions in TypeScript, you can start with the convenient `serverless-plugin-typescript`. But once you need to optimize the size of the deployment packages, you will most likely need to tune your packaging process with webpack.

You can start with individual packaging and then move on to bundling not only your source code but also your npm dependencies. But make sure that these dependencies don't use any binaries that webpack could drop during bundling, because this can lead to errors in production.

Because the AWS SDK v3 for Node.js is written in TypeScript, this is a good time to move all of your Lambda functions to TypeScript. I generally have a repository with a src folder inside it and a webpack.config.js at its root. Rather than setting up separate folders with separate package.json files for separate Lambda functions, I put them all in my src folder. That is mostly what I need, because it lets me share libraries and utilities between my functions.

The resulting proxy function has the correct signature to be used as a Lambda handler function. This way, all incoming events are passed to the proxy function of aws-lambda-fastify.

Three closures have been created by the loop, but each one shares the same single lexical environment, which has a variable with changing values. This is because the variable item is declared with var and thus has function scope due to hoisting.

The value of item.help is determined when the onfocus callbacks are executed. Because the loop has already run its course by that time, the item variable (shared by all three closures) has been left pointing to the last entry in the helpText list.

ASK CLI looks in your skill project's manifest (the skill.json file) for the skill's published locales.
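The loop-closure pitfall described above is easier to see in a small runnable sketch. The `helpText` entries and the `buildWithVar` / `buildWithLet` helpers below are illustrative names, and the callbacks simply return the help string instead of being attached to `onfocus`, so the example runs outside a browser.

```typescript
type Callback = () => string;

// Illustrative data; the field names follow the text above.
const helpText = [
  { id: "email", help: "Your e-mail address" },
  { id: "name", help: "Your full name" },
  { id: "age", help: "Your age (you must be over 16)" },
];

// `var` is function-scoped, so all three callbacks close over ONE `item`.
function buildWithVar(): Callback[] {
  const callbacks: Callback[] = [];
  for (var i = 0; i < helpText.length; i++) {
    var item = helpText[i];
    callbacks.push(() => item.help);
  }
  return callbacks;
}

// `let`/`const` are block-scoped, so each iteration gets its own `item`.
function buildWithLet(): Callback[] {
  const callbacks: Callback[] = [];
  for (let i = 0; i < helpText.length; i++) {
    const item = helpText[i];
    callbacks.push(() => item.help);
  }
  return callbacks;
}

// Every callback reports the last entry, because the loop already finished.
console.log(buildWithVar().map((cb) => cb()));
// Each callback reports its own entry.
console.log(buildWithLet().map((cb) => cb()));
```

Switching the declaration from `var` to `let` or `const` is usually the smallest fix, since it gives every iteration its own binding.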

These are listed in the manifest.publishingInformation.locales object. For each locale, ASK CLI looks in the skill project's models folder for a corresponding model file (for example, es-ES.json). ASK CLI waits for the uploaded models to build and then adds each model's eTag to the skill project's config file.

Webpack-bundle-analyzer result without bundled packages.

The analysis shows that the size of the bundled files is only 6.11 KB for the handler function and 1.16 KB for the authorizer.

This means that a significant part of our final package is taken up by node modules that we copied there without any processing. Interestingly, even though our own code is now minified, the package is still 3 KB larger than the initial one. The reason is that our package now contains package-lock.json.

Then, we'll add a new property, `region`, to the provider object, which deploys the app to the region we specify. This is completely optional, and AWS will default to `us-east-1` if it is not specified. It's important to choose regions close to your users in production because of latency.

During runtime, my checkout function wants the `TableNames` enum from the `reactshoppe-database` module, but it wasn't included in the Lambda deployment package.

The serverless.ts file is where the configuration for the deployment is held. This file tells the Serverless Framework the project name, the runtime language of the code, the list of functions, and a few other configuration options.

You should double-check that your bundled dependencies don't rely on any binaries; otherwise you will run into trouble in production. One way to check that you're safe is to implement good end-to-end tests of your deployed Lambda functions.

Note that unit tests won't help you here, because all of node_modules will be in scope without webpack processing. If you happen to know that a specific npm package has binary dependencies, you can add it to the `externals` block in the webpack config and still bundle all other packages.

When Pulumi produces a Lambda from a user-provided function, it transitively includes all packages specified in the `dependencies` section of package.json in the final uploaded Lambda.

Each stack generates its own Terraform workspace so that you can manage each stack independently.
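As a rough illustration of the `externals` approach mentioned above, here is a minimal sketch of a webpack configuration object. The package name `sharp`, the entry path `./src/handler.ts`, and the use of `ts-loader` are assumptions made for the example, not details from the original setup.

```typescript
// Hypothetical list of packages known to ship native binaries.
const nativePackages: string[] = ["sharp"];

// Map each package to a CommonJS external, so webpack leaves a plain
// require("<name>") in the bundle instead of inlining the package.
function toExternals(packages: string[]): Record<string, string> {
  return packages.reduce<Record<string, string>>((acc, name) => {
    acc[name] = `commonjs ${name}`;
    return acc;
  }, {});
}

// In a real webpack.config.ts this object would be the default export.
const config = {
  mode: "production",
  target: "node",
  entry: { handler: "./src/handler.ts" }, // assumed entry point
  externals: toExternals(nativePackages),
  resolve: { extensions: [".ts", ".js"] },
  module: {
    rules: [{ test: /\.ts$/, loader: "ts-loader", exclude: /node_modules/ }],
  },
  output: { libraryTarget: "commonjs2", filename: "[name].js" },
};

console.log(config.externals);
```

Keep in mind that a package listed in `externals` is no longer part of the bundle, so it still has to be shipped in the deployment package's node_modules.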

You can use stacks to connect multiple deployments across your application, simplifying your infrastructure workflow. In CDKTF v0.4+, asset constructs let you manage assets for resources that need them, such as template_file, S3 bucket objects, or Lambda function archive files.

Finally, it's time to see ESLint and Prettier in action. In our app.ts file, we'll change the declaration of the response variable from const to let. Using let is not good practice in this case, since we never reassign response. The editor should display an error, the broken rule, and suggestions to fix the code.

Don't forget to enable ESLint and Prettier in your editor if they are not set up already.

The AWS SAM CLI build command, sam build, slows down the development process because it tries to run npm install for each function, causing duplication. We will use an alternate build command from the aws-sam-webpack-plugin library to speed up our environment.

When a Lambda function is called on a cold start, there is an initialization delay while the runtime environment for the handler is created. Based on X-Ray traces, a DynamoDB event handler could spend up to 2 seconds before starting function execution.

You will also need to create a configuration file for Nakama called local.yml.

The runtime.js_entrypoint setting tells Nakama where to read the built JavaScript code.

To move the output somewhere else, set the outDir option of compilerOptions inside the tsconfig.json file. Afterwards, you'll have to call the Toolkit's start and deploy commands with the --functions-folder flag to point to the functions/ directory inside your output directory.

Your Lambda function won't live in your local environment forever.

It needs to get into an AWS environment before the magic can happen. The Serverless Framework needs a way to access AWS resources and deploy your functions on your behalf.

In the current article, we'll take a look at an even easier way to achieve the same thing. It may be one of the fastest and easiest ways to create a TypeScript AWS Lambda function. A CDK construct called AWS Lambda Nodejs helps us achieve our goal.

When you run the cdk deploy command, it first builds our project with all the required dependencies and then deploys the output to the cloud.

AWS SAM uses simple syntax to express functions, APIs, databases, and event source mappings. It provides a YAML template to model the application and offers a single deployment configuration.

One of the best features of using Fastify in serverless applications is the ease of development.

With Rollup installed as a dev dependency of your project, you now need to modify the build script in package.json to run the `rollup -c` command instead of the `tsc` command.

You should also add a type-check script that lets you verify that your TypeScript compiles without actually emitting a build file.

The JavaScript runtime code is fully sandboxed and cannot access the filesystem or input/output devices, or spawn OS threads or processes. This allows the server to guarantee that JS modules cannot cause fatal errors: the runtime code cannot trigger unexpected client disconnects or affect the main server process.

The crucial files created are hello-world/app.js, which holds the code for our AWS Lambda function handler, and event.json, which holds an example event. The next step is to init a SAM project with the nodejs8.10 runtime.

If we don't use the --location command line parameter, SAM CLI will download a hello world template and create a sam-app directory for it.

Webpack is a well-known tool for creating bundles of assets. The Serverless Framework has a webpack plugin that integrates into the serverless workflow and bundles the Lambda functions.

This file also includes CDK feature flags that influence the behavior of the cdk toolkit command line tool; see the CDK documentation for a definition of what these flags do.

In the previous article, we discussed one way to create a TypeScript AWS Lambda function using SAM. There we talked about a few manual steps to convert existing JavaScript code into TypeScript.

When you create a new CDKTF application from scratch, the cdktf init command installs these dependencies. The application from the sample repository is already initialized, so you can use npm install to install the application's dependencies. This file uses the preconfigured AWS provider (@cdktf/provider-aws), which you installed as a dependency with npm install. Importing the library as `aws` enables you to use your code editor's autocomplete functionality to help you write the CDK application code.

We'll create the service for our Lambda functions by creating a service.ts file in the /src/service folder. Then, we'll import DocumentClient from aws-sdk, import our Todo model, and create a TodosService class with a constructor method.

In Provider, we configure the cloud provider used for our project. Let's review the major configurations we've set up for our project. For one, information about our project is defined in the service section, aws-serverless-typescript-api.

To make it easier for you to find the corresponding paths and line numbers in index.ts, firebase deploy creates functions/lib/index.js.map. You can use this source map in your preferred IDE or via a node module.

If you run npm run build from the project's root, you should see the build folder, .aws-sam, created. Those of us in a Mac environment may need to make hidden files visible by pressing Command + Shift + . (period).

Here we are following the default configuration recommended by the TypeScript community.

In that folder, create a new file called apiResponses.ts.

This file is going to export the apiResponses object with the _200 and _400 methods on it. If you have to return other response codes, you can add them to this object.

Node.js has a unique event loop model that causes its initialization behavior to be different from other runtimes.
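For the apiResponses.ts file described above, a minimal sketch could look like the following. The response shape (`statusCode`, `headers`, `body`), the JSON content type, and the `buildResponse` helper are assumptions for illustration; only the `apiResponses` name and the `_200` / `_400` methods come from the text.

```typescript
// Assumed response shape, similar to what an API Gateway proxy integration expects.
interface ApiResponse {
  statusCode: number;
  headers: Record<string, string>;
  body: string;
}

// Hypothetical helper shared by all status-code methods.
const buildResponse = (statusCode: number, body: unknown): ApiResponse => ({
  statusCode,
  headers: { "Content-Type": "application/json" },
  body: JSON.stringify(body),
});

export const apiResponses = {
  _200: (body: unknown): ApiResponse => buildResponse(200, body),
  _400: (body: unknown): ApiResponse => buildResponse(400, body),
};

// Example usage inside a handler:
console.log(apiResponses._400({ message: "missing id" }));
```

Adding another response code is then a one-line change, for example a `_404` entry that calls the same helper.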

Specifically, Node.js uses a non-blocking I/O model that supports asynchronous operations. This model allows Node.js to perform efficiently for most workloads. For example, if a Node.js function makes a network call, that request may be designated as an asynchronous operation and placed into a callback queue. The function may continue to process other operations within the main call stack without getting blocked by waiting for the network call to return. Once the network call is returned, its callback is executed and then removed from the callback queue.

The index.js file exports a function named handler that takes an event object and a context object.
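To make the non-blocking model described above concrete, here is a small sketch in which a timer stands in for the network call. The `fakeNetworkCall` and `run` names are made up for this example.

```typescript
// A timer stands in for a real network request (assumption for the sketch).
function fakeNetworkCall(): Promise<string> {
  return new Promise((resolve) => setTimeout(() => resolve("response"), 10));
}

// Records the order in which the steps actually run.
function run(): Promise<string[]> {
  const order: string[] = [];

  order.push("start request");
  const pending = fakeNetworkCall().then((body) => {
    // Runs later, once the call has returned and the call stack is free.
    order.push(`callback: ${body}`);
  });

  // The function keeps going without waiting for the request.
  order.push("continue other work");

  return pending.then(() => order);
}

run().then((order) => console.log(order));
// → [ 'start request', 'continue other work', 'callback: response' ]
```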

This is the handler function that Lambda calls when the function is invoked. The Node.js function runtime gets invocation events from Lambda and passes them to the handler. In the function configuration, the handler value is index.handler.

Keep in mind that functions as a service should always use small and focused functions, but you can also run an entire web application with them.
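A handler of the kind described above might look like this in TypeScript. The `ExampleEvent`, `ExampleContext`, and `ExampleResult` interfaces are simplified stand-ins written for this sketch (real projects typically take these types from the `aws-lambda` typings), and the greeting logic is purely illustrative.

```typescript
// Simplified stand-in types (assumptions for this sketch).
interface ExampleEvent {
  name?: string;
}

interface ExampleContext {
  awsRequestId: string;
}

interface ExampleResult {
  statusCode: number;
  body: string;
}

// With this file compiled to index.js, the handler setting would be "index.handler":
// the part before the dot is the file, the part after it is the exported function.
export const handler = async (
  event: ExampleEvent,
  context: ExampleContext
): Promise<ExampleResult> => {
  const name = event.name ?? "world";
  return {
    statusCode: 200,
    body: JSON.stringify({
      message: `Hello, ${name}!`,
      requestId: context.awsRequestId,
    }),
  };
};
```

Calling the function directly with a hand-written event and context, as a unit test would, is a quick way to check it before deploying.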

It is important to remember that the bigger the application, the slower the initial boot will be.

The reason is that functions in JavaScript form closures. A closure is the combination of a function and the lexical environment within which that function was declared.

This environment consists of any local variables that were in-scope at the time the closure was created. In this case, myFunc is a reference to the instance of the function displayName that is created when makeFunc is run. The instance of displayName maintains a reference to its lexical environment, within which the variable name exists. For this reason, when myFunc is invoked, the variable name remains available for use, and "Mozilla" is passed to alert.
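The makeFunc example referred to above can be sketched as follows. To keep it runnable outside a browser, `displayName` returns the name instead of passing it to `alert`; that substitution is the only intended difference.

```typescript
function makeFunc(): () => string {
  const name = "Mozilla"; // local variable of makeFunc

  // displayName is an inner function: it closes over `name`.
  function displayName(): string {
    return name; // the browser version would call alert(name) here
  }

  return displayName;
}

// makeFunc has finished running, yet `name` is still reachable via the closure.
const myFunc = makeFunc();
console.log(myFunc()); // → Mozilla
```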

I default to using NodejsFunction instead of Lambda because it comes with some handy additional features. It uses esbuild to transpile and bundle the Lambda code. That way you can use TypeScript and install dependencies using a dedicated package.json without extra build configuration.

We'll use node-fetch later in the example to demonstrate this.

ASK CLI looks in your skill project's config file (in the .ask folder, which is in the skill project folder) for an existing skill ID. If the config file does not contain a skill ID, ASK CLI creates a new skill using the skill manifest in the skill project's skill.json file.

Then, it adds the skill ID to the skill project's config file.

So we have created a lambda-fns folder for all of our Lambda functions. We'll first import the relevant types from 'aws-lambda' and then add types to each of the function's parameters as well as the response object.

`serverless-offline` will allow us to test changes to our app locally. `serverless-plugin-typescript` will automatically compile our TypeScript files down to JavaScript, both when we're developing locally and when we deploy our app.

This guide assumes you have TypeScript and Node installed as well as an AWS account.

We'll be using serverless to set up our development environment and to package our app for deployment.

Install @aws-cdk/aws-s3-notifications with `npm install @aws-cdk/aws-s3-notifications`. Make sure to update all your AWS CDK libraries at the same time to avoid conflicts and deployment errors.

The local module (the module for the Pulumi application itself) is not captured in this fashion.

That is because this code will not actually be part of the uploaded node_modules, so it would not be found.

Below the Lambda function `UploadImage`, we added a new object called `environment`. With this, we can set environment variables that we can read via the `process.env` object during execution.
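Reading such a variable inside the function could look like the sketch below. The variable name `BUCKET_NAME` and the `getRequiredEnv` helper are hypothetical; the only part taken from the text is that the values arrive through `process.env`.

```typescript
// Hypothetical helper: read a variable and fail fast if it is missing.
// The second parameter defaults to process.env and exists so the helper
// can be exercised without touching the real environment.
function getRequiredEnv(
  name: string,
  env: Record<string, string | undefined> = process.env
): string {
  const value = env[name];
  if (value === undefined || value === "") {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// Inside the Lambda this would simply be getRequiredEnv("BUCKET_NAME").
const bucketName = getRequiredEnv("BUCKET_NAME", { BUCKET_NAME: "my-upload-bucket" });
console.log(bucketName); // → my-upload-bucket
```

Failing early with a clear message tends to be easier to debug than an `undefined` value surfacing later in an SDK call.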

Assuming you have Node.js installed on your laptop, get started with the CDK by running `npx cdk init --language typescript` in an empty directory. To set up the Lambda functions, the NodejsFunction construct is excellent. We can specify the file to use as the entry point and the name of the exported function. CDK will then transpile and package this code during synth using Parcel. For this step, you need a running Docker setup on your machine, as the bundling happens inside a container.

Notice how there are two directories in cdktf.out/stacks.
